Kubernetes Security for Sysadmins

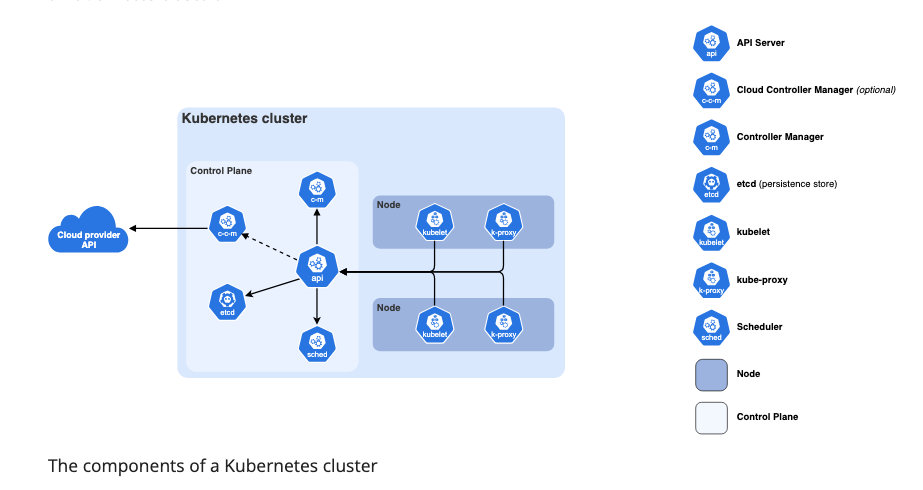

Securing Kubernetes can feel overwhelming: every component, from the API server to your nodes, is a potential attack vector. This guide skips the theory and walks through concrete steps you can take to lock down your clusters, explaining why each measure matters.

Umegbewe Great Nwebedu

Published on May 15, 2024
